Long Science

If you’re not already familiar (and, frankly, are the kind of person who is weird enough to be subscribed to Macroscience), I highly recommend that you immediately stop what you’re doing and visit the Wikipedia page for “long-term experiment”. Then, check out Sam Arbesman’s collection of Long Data. Then, Michael Nielsen’s list of long projects.

If you’re anything like me, the scientific efforts that appear on these lists are deeply compelling. That’s due in part to their relative rarity. It’s hard to find cases of an experiment or a data collection effort that grinds away on the scale of decades, and easy to appreciate the uncommon dedication and focus they represent.

These efforts are also compelling on an epistemological level. They suggest that there is a wealth of valuable knowledge to be gleaned from even fairly humble explorations that operate over a long, long period of time.

Famously, there’s the Framingham Heart Study, a longitudinal study ongoing since 1948 that has yielded major insights on cardiovascular health. But there are also efforts like the Park Grass Experiment, a study of fertilizer and hay yields ongoing since 1856 that has produced advances in our thinking about biodiversity and natural selection.

These two contrasting characteristics of Long Science – its relative rarity and its demonstrated value – suggest a question. Simply put, are we producing enough Long Science?

I suspect that we generate less Long Science than is optimal. Part of the problem is that our scientific economy has given rise to a circumstance where “Long” is synonymous with “Big”. The longest-term projects that our funding machinery supports tend to be precisely the big-dollar efforts that go to giant institutions with large team science operations.

But Long Science transcends the heavy machinery of Big Science. One of the most striking things about efforts like the Cape Grim Air Archive or the William James Beal germination experiment is simply how small-scale many very long-term scientific efforts are in practice. The simple, low-cost collection of datapoints over the course of decades is wildly cheap compared to the value it might potentially yield.

Oddly, it is precisely these inexpensive bets that appear to not be widely funded in the scientific economy and are scarce enough that eccentric people on the internet are motivated to compile obsessive lists of them. This suggests to me that there may be significant latent experimental supply – and scientific progress – that our society effectively leaves on the table. We could be productively expanding long-term, small-scale scientific exploration to inexpensively generate valuable knowledge.

What kind of lever could we use to accomplish such a thing? One approach could be to popularize a style of grant that I call TILT – tiny investment, long term. Under a TILT grant, a foundation or government agency would award grantees a relatively small stream of money spread out over an extremely long period of time, say twenty or thirty years.

You can tell that we likely have underinvested in exploring this area because the entire notion of TILT can strike our everyday intuitions around science funding as absurd. Who would be fool enough to agree to do something for so long with so little financial return?

Who indeed. TILT is interesting precisely because of the kinds of institutions and research it would repel. Big organizations with heavy administrative costs and payroll burden. Research with major capital requirements. Scientists with short-term horizons for their research agendas.

The remaining contenders who would be attracted to TILT funding strike me as a very interesting cohort, one that produces research that is difficult to source elsewhere in the economy of science. Lightweight, nimble organizations with low administrative baggage. Research taking low-cost approaches to traditionally high-cost problems. Scientists with expansive, long-term horizons for their work. This is a slice of the scientific ecosystem that a policymaker might very well want the ability to accelerate and direct as national needs arise. TILT provides an instrument to do so.

TILT might create positive collateral effects on the behavior of scientific actors beyond simply shifting the types of experiments being produced. TILT funding is, in effect, an ultra-dependable asset class that can create long-term sustainability for an organization. Institutions and labs could work to win a bundle of TILT grants to create a financial bedrock, giving them the long-term stability to be more aggressive and risk-taking in their scientific work elsewhere.

Longevity also fosters its own behavioral patterns that we may want the ability to influence in science. Valuable process knowledge can be fostered and accumulated within stable, long-term projects. Maintenance itself is also a crucial skill, and the proliferation of truly long-term projects creates training grounds to build those operational muscles. Increasing TILT funding is potentially a way of driving the growth of these often tacit, hard-to-measure areas of human capital in science.

We should be experimenting with things like TILT. But the bigger point is that TILT is just one of a general class of macroscientific policy levers that bear further exploration: methods for shaping the relative time-horizon of scientific and technological institutions. We get very different types of innovation depending on whether our societal portfolio of bets on progress is targeting the next year or the next two decades. Building the tools to fine-tune these horizons will be a key building block of truly effective science policy.

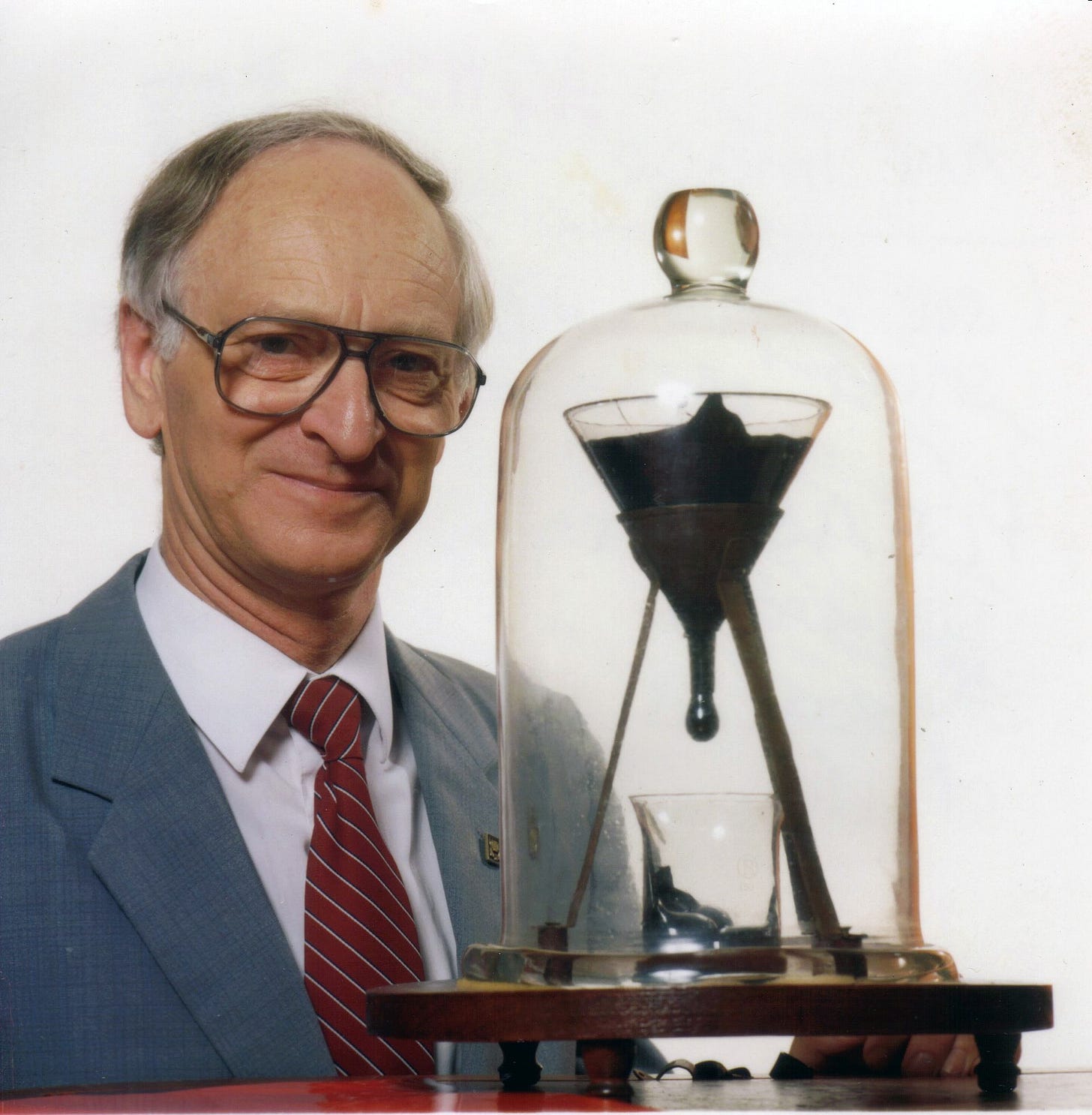

Thanks to Sam Arbesman, whose offhand comment about the University of Queensland pitch drop experiment (watch the live feed!) inspired this piece.

Another idea to increase the temporal scope of an experiment is to run it in parallel.

An effort like Sebastian Cocioba's plan to democratize and desktop-ify the LTEE (the long-term evolution experiment) is a good example: https://experiment.com/projects/affordable-and-accessible-experimental-evolution-for-the-classroom