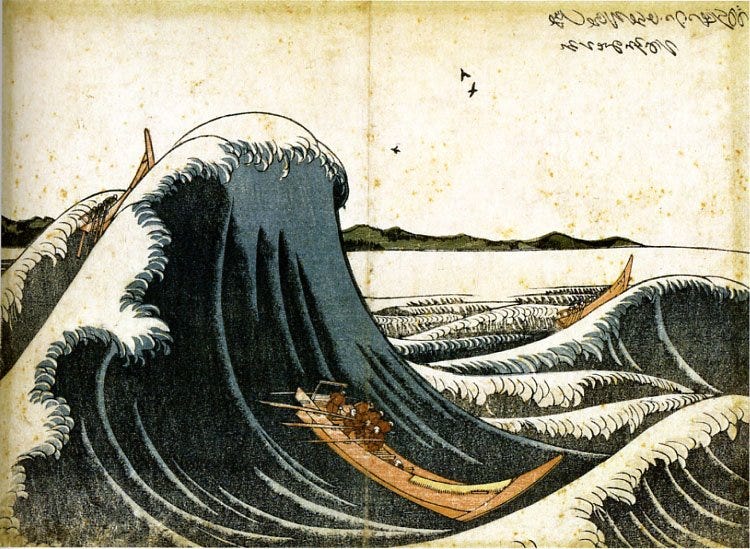

Funding Against the Tide

The last edition of Macroscience focused on the curious fact that many scientists and industry insiders considered mRNA vaccines, ex ante, to be a dead end for addressing the coronavirus pandemic. There was a substantial gap between what was believed to be plausible in a field, and what was in reality possible.

We should care about these moments where gaps exist between the plausible and the possible since they are moments where science may fail to seize critical opportunities. The mRNA case is particularly dramatic since it suggests an alternate history where society had the key tool for combatting the outbreak sitting right there on the shelf and failed to take advantage of it.

These situations are also of extreme relevance to the political economy of science because they pose a thorny question: under what conditions is outside intervention in a field’s research agenda – particularly against expert consensus – justified and desirable?

Most economists would caution against throwing unlimited money toward technologies or approaches that a field considers unpromising. The underlying assumptions are that researchers know their business, and that fields are generally efficient at allocating limited resources across a wide range of potential research bets. Therefore, in a typical setting, the standard operating procedure is to defer to expert guidance in the allocation of resources like funding.

The coronavirus case presents an exception to this general rule of governance. In a genuine emergency, the costs of delay and of missing a critical opportunity can be so enormous that it is rational to “pull every lever.” By artificially pushing the frontier of the plausible to capture all that is possible, we reduce the risk that expert consensus has somehow got it wrong.

But is emergency the only scenario under which intervention is justified? Certainly those following in the tradition of a Vannevar Bush might instinctively commit to such an austere vision of how the economy of science should be governed. But doing so would be misguided. Inefficiencies in science abound, particularly in early, precommercial domains where we lack the market signals that might help us robustly surface good work.

One major class of market failure worth exploring in this vein is the situation in which a research field forecloses on certain nascent methodologies too early. Clusters of early negative results can cause experts to prematurely “fold” on an approach, considering it unviable even where further investment would have led to a breakthrough. There are echoes of this in the mRNA case. Some of the interviews that I’ve been conducting suggest that mRNA vaccines were considered particularly unpromising in the early 2010s in part because of a legacy of poor to middling results in DNA vaccines.

This bias may also work to forestall the revisiting of old failed approaches, even when external circumstances change to allow previously unworkable ideas to become workable. Neural networks were considered a stagnant and fundamentally limited method for achieving artificial intelligence after a period of excitement in the 1960s. The subfield lingered for decades at the fringes of the field before returning in force in the 2010s as massive data and parallel processing through GPUs proved out the power of the approach.

It’s true that both mRNA vaccines and neural networks ultimately got their moment in the sun. But, for me, these cases pose the question of how much sooner we could have accelerated to these breakthroughs. They also suggest that there may be other such viable pivotal technologies out there considered dead and buried by expert opinion.

It is worth emphasizing that premature folding is not due to some inherent cowardice or mystical psychological blind spot on the part of scientists. There are many structural reasons why researchers might choose to give up faster than they should.

For one, perverse profit incentives might curtail full exploration. One hypothesis that some interviewees have voiced to me on the mRNA vaccine project is that the profitability of traditional vaccines was so great that it hindered any committed investigation of alternative approaches with DNA that might threaten that business.

Similarly, mercurial and impatient funders might push academic researchers to drop explorations earlier than they should. One reason that neural networks research arguably collapsed in the 1960s was that the field failed to rapidly produce the kinds of world-changing outcomes that its backers and proponents hoped were right around the corner.

Finally, research norms themselves may accentuate loss aversion bias among scholars. Bhattacharya and Packalen (2020) have argued that an overemphasis on citations as a measure of impact has shifted research away from more exploratory, failure-prone projects and towards incremental work.

These structural problems are widespread, and at least provide good reason to suspect that “folding early” may be a recurring phenomenon across fields. In other words, the frontier of the plausible has a tendency to be drawn more narrowly than is optimal: a string of negative results can cause a field to quickly retreat before it is really shown that a given approach is genuinely unworkable. The end result is that we lose out on useful discoveries and the occasional game-changing advance like mRNA vaccines, sometimes for a decade or more.

This would seem to provide a clear justification for countering these tendencies by providing limited, countercyclical funding for research on potentially high-return ideas. Science funding institutions should deploy their resources to encourage a final burst of effort precisely as expert consensus is turning negative on an approach to a problem. Funders might commit to specifically backing replications of negative results in a field, systematically creating second chances for apparently failed ideas. More ambitiously, programs might be established to provide time-bound “last shot” funding for once-promising, high-potential fields that see a period of declining citations and researcher participation.

Expert consensus will frequently be right, so there will be a cost to doing this. But routinely creating opportunities that hedge against the risks of early quitting will occasionally unlock breakthroughs that could more than pay for these bets.

Thanks as always to Santi Ruiz and Caleb Watney for their comments on earlier drafts of this piece. Thanks also to Matt Clancy and Ulkar Aghayeva, who pointed me towards some great literature relevant to the “folding early” problem.

Agree that it's interesting to see how fields come to consensus on path-dependent decisions. Rickover arguably forced the entire field of nuclear reactors into pressurized water reactors over some of the more exotic reactors being explored at the time (I'm certainly not in a position to judge whether that was right, but it is notable that at least one agentic government official was in a position to make such a path-dependent funding decision). I've written previously about other examples of how government funding leads to different path-dependent technology development, notably for quantum computing (https://charlesyang.substack.com/p/real-life-examples-of-path-criticality), but it would be interesting to find more examples.

Proposals:

Perhaps making grants to people (as HHMI does) rather than for ideas solves this pretty well.

Make some grants based on a smaller pool of Yes votes. One way to do this is to give committees a stack of grants and a number of Yes votes, then allow them to allocate more than one vote to a given grant.
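
The multi-vote proposal above resembles what is sometimes called cumulative voting, and a small sketch may make the mechanics concrete. Everything here is an assumption for illustration: the function name `fund_by_cumulative_votes`, the per-reviewer vote budget (the proposal could equally mean one budget for the whole committee), the alphabetical tie-break, and the example ballots are all hypothetical rather than a description of any real funder's process.

```python
from collections import Counter


def fund_by_cumulative_votes(ballots, votes_per_reviewer, n_funded):
    """Rank grants by total Yes votes and return the top `n_funded` grant ids.

    `ballots` is a list with one dict per reviewer, mapping a grant id to
    the number of Yes votes that reviewer places on it. A reviewer may
    stack several votes on a single grant (hypothetical rule).
    """
    totals = Counter()
    for ballot in ballots:
        if any(v < 0 for v in ballot.values()):
            raise ValueError("vote counts must be non-negative")
        if sum(ballot.values()) > votes_per_reviewer:
            raise ValueError("reviewer exceeded their vote budget")
        totals.update(ballot)  # adds this reviewer's votes to the running totals

    # Most votes first; ties broken alphabetically by grant id (an assumption).
    ranked = sorted(totals.items(), key=lambda item: (-item[1], item[0]))
    return [grant for grant, _ in ranked[:n_funded]]


# Three reviewers, four votes each, two grants to fund.
ballots = [
    {"C": 4},                  # one reviewer goes all-in on a contrarian idea
    {"A": 2, "B": 2},
    {"A": 2, "B": 1, "D": 1},
]
funded = fund_by_cumulative_votes(ballots, votes_per_reviewer=4, n_funded=2)
print(funded)
```

In this toy example grant C is funded alongside A even though only one of three reviewers supports it, because that reviewer spent their whole budget on it. That is the property the proposal seems to be after: a single strongly convinced reviewer can carry an idea that a majority vote would reject.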